Version 5 (modified 13 years ago)

Multi-Party Experiment Creation Example

This example walks through creating a multi-party experiment from a single experiment topology description. That description may have been created by a composition program or written by hand. We describe the key elements of the experiment description and then walk through creating the experiment.

Experiment Description

The ns-2 description of our example is below (a topdl version is attached):

```tcl
# SERVICE: project_export:deter/a::project=TIED
# SERVICE: project_export:deter/b::project=SAFER
# SERVICE: seer_master:deter/a:deter/a,deter/b
# SERVICE: local_seer_control:deter/a
# SERVICE: local_seer_control:deter/b
set ns [new Simulator]
source tb_compat.tcl

set a [$ns node]
set b [$ns node]
set c [$ns node]
set d [$ns node]
set e [$ns node]

set deter_os "Ubuntu1004-STD"
set seer_tar "/users/faber/seer-deploy/seer-u10-current.tgz"

tb-set-node-os $a $deter_os
tb-set-node-testbed $a "deter/a"
tb-set-node-tarfiles $a "/usr/local/" $seer_tar
tb-set-node-startcmd $a "sudo python /usr/local/seer/experiment-setup.py Basic"

tb-set-node-os $b $deter_os
tb-set-node-testbed $b "deter/a"
tb-set-node-tarfiles $b "/usr/local" $seer_tar
tb-set-node-startcmd $b "sudo python /usr/local/seer/experiment-setup.py Basic"

tb-set-node-os $c $deter_os
tb-set-node-testbed $c "deter/b"
tb-set-node-tarfiles $c "/usr/local" $seer_tar
tb-set-node-startcmd $c "sudo python /usr/local/seer/experiment-setup.py Basic"

tb-set-node-os $d $deter_os
tb-set-node-testbed $d "deter/b"
tb-set-node-tarfiles $d "/usr/local" $seer_tar
tb-set-node-startcmd $d "sudo python /usr/local/seer/experiment-setup.py Basic"

tb-set-node-os $e $deter_os
tb-set-node-testbed $e "deter/b"
tb-set-node-tarfiles $e "/usr/local" $seer_tar
tb-set-node-startcmd $e "sudo python /usr/local/seer/experiment-setup.py Basic"

set link0 [ $ns duplex-link $a $b 100Mb 0ms DropTail]
set link1 [ $ns duplex-link $c $b 100Mb 0ms DropTail]
set lan0 [ $ns make-lan "$c $d $e" 100Mb 0ms ]

$ns rtproto Static
$ns run
```

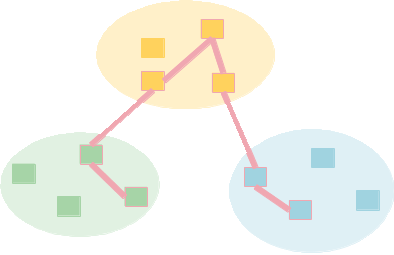

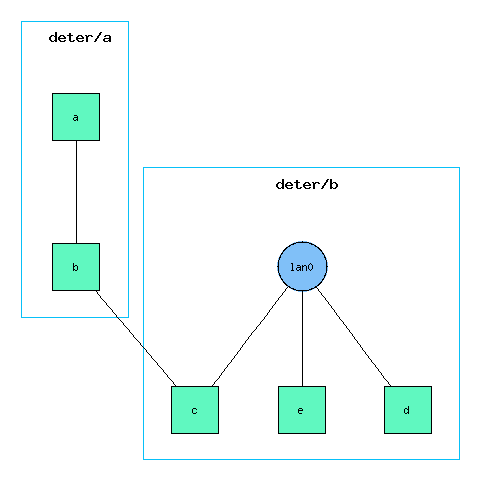

This is an experiment topology containing five computers, connected mostly by point-to-point links plus a single LAN. The computers are named a through e and are allocated to two parties. The party names appear in the tb-set-node-testbed commands (and as testbed attributes in the topdl). Nodes a and b belong to deter/a, and c, d, and e belong to deter/b, so link1 (between b and c) is the only connection between the two parties. The topology image above was generated with fedd_image.py --group testbed --file multi-swap-example.xml --out multi.png.
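You can also check the party assignment mechanically. The Python sketch below is a set of hypothetical helpers, not part of fedd. It groups nodes by the testbed named in each tb-set-node-testbed command and lists the duplex links whose endpoints fall in different parties. It uses simple regular expressions rather than a Tcl interpreter, so it only understands the command forms used in this example. It also ignores make-lan, which here stays entirely inside deter/b.

```python
import re
from collections import defaultdict

# Patterns for the two ns-2 commands this sketch looks at.
TESTBED_RE = re.compile(r'tb-set-node-testbed\s+\$(\w+)\s+"([^"]+)"')
LINK_RE = re.compile(r'duplex-link\s+\$(\w+)\s+\$(\w+)')


def nodes_by_party(ns_text):
    """Map each party (testbed) name to the sorted list of nodes assigned to it."""
    parties = defaultdict(list)
    for node, testbed in TESTBED_RE.findall(ns_text):
        parties[testbed].append(node)
    return {testbed: sorted(nodes) for testbed, nodes in parties.items()}


def crossing_links(ns_text):
    """Return (x, y) endpoint pairs of duplex links joining two different parties."""
    owner = dict(TESTBED_RE.findall(ns_text))  # node -> testbed
    return [(x, y) for x, y in LINK_RE.findall(ns_text)
            if owner.get(x) != owner.get(y)]
```

Applied to the attached multi-swap-example.tcl, this groups a and b under deter/a and c, d, and e under deter/b. It reports link1 (c to b) as the only link that crosses between the parties.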

The individual parts of the experiment are configured using the SERVICE directives in the comments of the ns-2 description. The same directives can also be given on the fedd_create.py command line using --service. Currently that is the only way to specify them when starting an experiment from a topdl description. The --service option takes the same format as a SERVICE line. Services in general are described elsewhere.
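A topdl experiment needs its services on the command line, so it can help to lift the SERVICE lines out of the ns-2 file and turn them into fedd_create.py arguments. The following is a minimal Python sketch with hypothetical helper names. It assumes that --service takes the spec as its next argument and may be repeated. It also assumes the topdl file is passed with the same --file option used for the ns-2 file above. Check both against your fedd_create.py before relying on them.

```python
import re

# A "# SERVICE: <spec>" comment line in the ns-2 file.
SERVICE_RE = re.compile(r'^\s*#\s*SERVICE:\s*(\S+)')


def service_specs(ns_text):
    """Return the service specs embedded in ns-2 comment lines, in file order."""
    specs = []
    for line in ns_text.splitlines():
        match = SERVICE_RE.match(line)
        if match:
            specs.append(match.group(1))
    return specs


def create_command(description_file, exp_name, specs):
    """Build a fedd_create.py argument list that passes each spec via --service.

    Assumption: --service takes the spec as its next argument and is repeatable.
    """
    argv = ["fedd_create.py", "--file", description_file,
            "--experiment_name=" + exp_name]
    for spec in specs:
        argv += ["--service", spec]
    return argv
```

For example, create_command("multi-swap-example.xml", "faber-multi", service_specs(open("multi-swap-example.tcl").read())) yields an argument list that you can inspect and then pass to subprocess.run.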

Briefly, the first two lines set the DETER project used to configure each party's area. The deter/a area becomes an experiment owned by the TIED project, and deter/b becomes one owned by the SAFER project. The project_export service exports the user configuration and filesystem of the project named by its project attribute (here, project=TIED or project=SAFER).
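The SERVICE specs in this example appear to follow a colon-separated layout:

- service name
- exporting area
- importing areas (comma-separated)
- attributes

That reading is inferred from the five lines shown here, not from a format specification. Splitting multiple attributes on commas is a further assumption. The Python sketch below (hypothetical names) parses specs under that reading and recovers the area-to-project mapping set by project_export.

```python
from typing import NamedTuple


class Service(NamedTuple):
    name: str
    exporter: str
    importers: list
    attrs: dict


def parse_service(spec):
    """Split 'name:exporter:importers:attributes'; missing trailing fields are empty."""
    name, exporter, importers, attrs = (spec.split(":", 3) + ["", "", ""])[:4]
    return Service(
        name=name,
        exporter=exporter,
        importers=[area for area in importers.split(",") if area],
        # Assumption: attributes are key=value pairs separated by commas.
        attrs=dict(kv.split("=", 1) for kv in attrs.split(",") if "=" in kv),
    )


def projects_by_area(specs):
    """Map each exporting area to the DETER project named by project_export."""
    result = {}
    for svc in map(parse_service, specs):
        if svc.name == "project_export" and "project" in svc.attrs:
            result[svc.exporter] = svc.attrs["project"]
    return result
```

On this example's specs, projects_by_area returns {"deter/a": "TIED", "deter/b": "SAFER"}. Parsing seer_master:deter/a:deter/a,deter/b gives deter/a as the exporter and both areas as importers.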

The next three lines configure the SEER experiment control system. The local_seer_control service adds a node named control to each party's area, and that node is effectively invisible outside the area. The other computers in the area use it as their SEER controller, and experimenters can connect to it directly to view and manipulate that area. The seer_master service adds a node named seer-master to the experiment, to which each area's controller also connects. Connecting to the seer-master controller lets you view and manipulate the whole experiment.

Creating the Experiment

To create the experiment:

```
fedd_create.py --file multi-swap-example.tcl --experiment_name=faber-multi
```

You can provide any identifier you like for the experiment name.

The command returns fairly quickly (though not instantly) with output like this:

```
localname: faber-multi
fedid: b4339d8a339097e87ed5624a36b584fe95b0ea0d
status: starting
```

The fedid is the unique name for the experiment, and the localname is a human-readable nickname derived from the experiment name passed to fedd_create.py. Generally the name passed is the one used, but if there is a name collision, fedd disambiguates it. Finally, the status is given: "starting" means that fedd is constructing the experiment. This generally takes 10 minutes or so, though trouble with individual nodes can make it take longer. Fedd swaps in a separate experiment for each sub-experiment simultaneously.
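If you script experiment creation, you will usually want to capture the fedid and localname from this output. The following Python sketch (hypothetical helper) pulls out the three key: value fields shown above. It accepts them either on one line or on separate lines, since the exact layout is an assumption.

```python
import re

# Each field is printed as "key: value"; whitespace between fields may be
# spaces or newlines.
FIELD_RE = re.compile(r'(localname|fedid|status):\s*(\S+)')


def parse_create_output(text):
    """Return a dict with the localname, fedid and status reported by fedd_create.py."""
    return dict(FIELD_RE.findall(text))
```

Run on the example output, this returns localname faber-multi, the fedid b4339d8a339097e87ed5624a36b584fe95b0ea0d, and status starting.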

You can check on the progress of the experiment using fedd_multistatus.py, which prints a brief status line for each federated experiment:

```
$ fedd_multistatus.py
faber-multi:b4339d8a339097e87ed5624a36b584fe95b0ea0d:starting
```

Once the experiment is active, the same command reports:

```
$ fedd_multistatus.py
faber-multi:b4339d8a339097e87ed5624a36b584fe95b0ea0d:active
```

An experiment can also be "failed", which means it will not come up. There are also a couple of other, self-explanatory states.
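Polling fedd_multistatus.py is easy to automate. The Python sketch below (hypothetical names) parses the localname:fedid:status lines and waits until a given experiment leaves the starting state. To stay testable without fedd installed, it takes the command output from a caller-supplied function. It treats any status other than starting as final, which is an assumption given that the other states are not listed here.

```python
import time


def parse_multistatus(text):
    """Map localname -> (fedid, status) from 'localname:fedid:status' lines."""
    result = {}
    for line in text.splitlines():
        parts = line.strip().rsplit(":", 2)
        if len(parts) == 3:
            name, fedid, status = parts
            result[name] = (fedid, status)
    return result


def wait_for(fetch, localname, interval=60.0, timeout=3600.0):
    """Poll until localname's status is no longer 'starting'; return that status.

    fetch is any zero-argument callable returning fedd_multistatus.py output.
    """
    deadline = time.monotonic() + timeout
    while True:
        entry = parse_multistatus(fetch()).get(localname)
        if entry is not None and entry[1] != "starting":
            return entry[1]
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{localname} still starting after {timeout} s")
        time.sleep(interval)
```

For real use, fetch could be `lambda: subprocess.run(["fedd_multistatus.py"], capture_output=True, text=True, check=True).stdout`, assuming fedd_multistatus.py is on your PATH.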

Because creation takes a while, users want to see what's going on, even if it is somewhat cryptic. There are several ways to do this. The fedd_spewlog.py command prints the fedd swapin log to stdout and terminates when the experiment fails or activates. For example:

```
$ fedd_spewlog.py --experiment_name faber-multi
21 Sep 11 13:57:10 fedd.experiment_control.faber-multi Calling StartSegment at https://users.isi.deterlab.net:23233
21 Sep 11 13:57:10 fedd.experiment_control.faber-multi Calling StartSegment at https://users.isi.deterlab.net:23231
21 Sep 11 13:57:10 fedd.experiment_control.faber-multi Calling StartSegment at https://users.isi.deterlab.net:23231
21 Sep 11 13:57:10 fedd.experiment_control.faber-multi Calling StartSegment at https://users.isi.deterlab.net:23233
Allocated vlan: 3808
Allocated vlan: 3809
21 Sep 11 13:58:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 1 mins)
21 Sep 11 13:59:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 2 mins)
21 Sep 11 14:00:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 3 mins)
21 Sep 11 14:01:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 4 mins)
21 Sep 11 14:02:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 5 mins)
21 Sep 11 13:57:17 fedd.access.faber-multi-a State is swapped
21 Sep 11 13:57:17 fedd.access.faber-multi-a [cmd_with_timeout]: /bin/rm -rf /proj/TIED/exp/faber-multi-a/tmp
21 Sep 11 13:57:17 fedd.access.faber-multi-a [cmd_with_timeout]: /bin/rm -rf /proj/TIED/software/faber-multi-a/*
21 Sep 11 13:57:17 fedd.access.faber-multi-a [cmd_with_timeout]: mkdir -p /proj/TIED/exp/faber-multi-a/tmp
21 Sep 11 13:57:17 fedd.access.faber-multi-a [cmd_with_timeout]: mkdir -p /proj/TIED/software/faber-multi-a
21 Sep 11 13:57:18 fedd.access.faber-multi-a [modify_exp]: Modifying faber-multi-a
21 Sep 11 13:57:34 fedd.access.faber-multi-a [modify_exp]: Modify succeeded
21 Sep 11 13:57:34 fedd.access.faber-multi-a [swap_exp]: Swapping faber-multi-a in
21 Sep 11 14:02:43 fedd.access.faber-multi-a [swap_exp]: Swap succeeded
21 Sep 11 14:02:43 fedd.access.faber-multi-a [get_mapping] Generating mapping
21 Sep 11 14:02:43 fedd.access.faber-multi-a Mapping complete
21 Sep 11 14:03:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 6 mins)
21 Sep 11 14:04:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 7 mins)
21 Sep 11 14:05:10 fedd.experiment_control.faber-multi Waiting for sub threads (it has been 8 mins)
```
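The log above is not strictly chronological. The fedd.access.faber-multi-a lines starting at 13:57:17 are printed after the 14:02:10 controller line. Lines such as "Allocated vlan: 3808" also carry no timestamp. The Python sketch below (hypothetical helpers) sorts the timestamped lines and measures how long a sub-experiment's swap took. It assumes the "day month 2-digit-year time" prefix and the [swap_exp] messages look exactly as in this example.

```python
import re
from datetime import datetime

# "21 Sep 11 13:57:10 <logger> <message>"; lines without this prefix are skipped.
LINE_RE = re.compile(r'^(\d{1,2} \w{3} \d{2} \d{2}:\d{2}:\d{2}) (\S+) (.*)$')


def parse_log(text):
    """Return (timestamp, logger, message) tuples sorted by time."""
    entries = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            ts = datetime.strptime(match.group(1), "%d %b %y %H:%M:%S")
            entries.append((ts, match.group(2), match.group(3)))
    return sorted(entries)


def swap_seconds(entries, sub_experiment):
    """Seconds from '[swap_exp]: Swapping ...' to 'Swap succeeded', or None."""
    logger = "fedd.access." + sub_experiment
    start = end = None
    for ts, name, message in entries:
        if name != logger:
            continue
        if message.startswith("[swap_exp]: Swapping"):
            start = ts
        elif message.startswith("[swap_exp]: Swap succeeded"):
            end = ts
    if start is None or end is None:
        return None
    return (end - start).total_seconds()
```

On the log above, swap_seconds(parse_log(log_text), "faber-multi-a") gives 309.0, the 5 minutes 9 seconds between 13:57:34 and 14:02:43.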

Once the DETER experiments begin to swap in, you can watch their activity logs in the DETER web interface to see what each individual experiment is doing. In this example, you would look at SAFER/faber-multi-b and TIED/faber-multi-a. The project names are the ones assigned to each part by the project_export services, and the experiment names come from the log.
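Given the area-to-project mapping, the names to look for in the web interface can be derived. The Python sketch below is hypothetical. Its naming rule, in which area deter/x yields an experiment called localname-x, is inferred only from this example (faber-multi-a and faber-multi-b) and may not hold in general.

```python
def deter_experiment_names(localname, area_projects):
    """Guess 'PROJECT/experiment' names for each area's DETER sub-experiment.

    Assumption (from this example only): area 'deter/x' yields '<localname>-x'.
    """
    return {
        area: f"{project}/{localname}-{area.rsplit('/', 1)[-1]}"
        for area, project in area_projects.items()
    }
```

With localname faber-multi and the mapping {"deter/a": "TIED", "deter/b": "SAFER"}, this yields TIED/faber-multi-a and SAFER/faber-multi-b, matching the names above.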

The fedd logs are also world-readable on users.isi.deterlab.net. To watch a multi-party swap-in, the following logs may be interesting:

```
/var/log/fedd.log           # the main experiment controller log
/var/log/fedd.deter.log     # the log for the DETER testbed access controller (the component swapping experiments)
/var/log/fedd.internal.log  # the log for the access controller handing out VLANs to interconnect on DETER
```

The easiest way to view them is:

```
$ tail -f /var/log/fedd.log
```
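Because /var/log/fedd.log is shared by every experiment on the server, you may want to filter it. The Python sketch below (hypothetical helpers) follows a file the way tail -f does. It keeps only lines whose logger name ends in your localname, optionally followed by a -suffix as in fedd.access.faber-multi-a. It assumes fedd.log uses the same logger naming as the fedd_spewlog.py output. Another experiment whose name starts with your localname plus a dash would also match.

```python
import os
import re
import time


def follow(path, from_start=False, poll=1.0):
    """Yield lines appended to path, like `tail -f`; this generator never ends."""
    with open(path) as f:
        if not from_start:
            f.seek(0, os.SEEK_END)  # begin at the current end of the file
        while True:
            line = f.readline()
            if line:
                yield line.rstrip("\n")
            else:
                time.sleep(poll)  # no new data yet; wait and retry


def for_experiment(lines, localname):
    """Keep lines whose logger name ends in .<localname> or .<localname>-<suffix>."""
    pattern = re.compile(r"\." + re.escape(localname) + r"(?:-\S+)?\s")
    for line in lines:
        if pattern.search(line):
            yield line
```

Usage: `for line in for_experiment(follow("/var/log/fedd.log"), "faber-multi"): print(line)`. Stop it with Ctrl-C.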

Experiment Status

Once the experiment is active, you can look at and control the individual pieces using standard DETER interfaces. The fedd_ftopo.py command shows the physical machine and testbed to which each experiment node was mapped:

```
$ fedd_ftopo.py --experiment_name faber-multi
d:pc027.isi.deterlab.net:deter/b
e:pc020.isi.deterlab.net:deter/b
a:pc009.isi.deterlab.net:deter/a
b:pc044.isi.deterlab.net:deter/a
c:pc022.isi.deterlab.net:deter/b
```
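To log into the machines for a given party, it helps to group this output by testbed. The following Python sketch (hypothetical helper) splits each node:host:testbed entry. It accepts entries separated by any whitespace, so it works whether they arrive one per line or on a single line.

```python
from collections import defaultdict


def parse_ftopo(text):
    """Map testbed -> {node: physical host} from 'node:host:testbed' entries."""
    layout = defaultdict(dict)
    for token in text.split():
        node, host, testbed = token.split(":", 2)
        layout[testbed][node] = host
    return dict(layout)
```

For the output above, parse_ftopo maps deter/a to nodes a (pc009) and b (pc044), and deter/b to c, d, and e. Each host name can then be used to log into that node.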

You can manipulate the various parts by logging into the machines or using SEER.

Attachments (3)

- multi-swap-example.tcl (1.5 KB), added 13 years ago
- multi-swap-example.xml (5.2 KB), added 13 years ago
- multi.png (2.3 KB), added 13 years ago: basic image of the experiment