
The DETER Federation Architecture (DFA)

This is a basic overview of the DETER federation system. More details on this implementation and configuration are in later documents. You can also read these academic papers that capture the evolution of the design to this point.

The DFA is a system that allows a researcher to construct experiments that span testbeds by dynamically acquiring resources from other testbeds and configuring them into a single experiment. As closely as possible that experiment will mimic a single DETER/Emulab experiment.

Though the experiment appears to be a cohesive whole, the testbeds that loan the resources retain control of those resources. Because testbeds retain this control, each testbed may issue credentials necessary for manipulating the federated resources. For example, a testbed that has loaned nodes to an experiment may require the experimenter to present a credential issued by that testbed (e.g., an SSH key or SSL certificate) to reboot those nodes. The system acquires those credentials on behalf of experimenters and distributes them on behalf of testbeds.

Testbed administrators may use the system to establish regular policies between testbeds to share resources across many users of a testbed. Similarly, a single user with accounts on multiple testbeds can use the same interfaces to coordinate experiments that share their testbed resources, assuming that sharing those resources does not violate the policy of any of the constituent testbeds.

Federated Experiments

A researcher may build a federated experiment to

- Connect resources from testbeds with different focuses - e.g., connect power system resources with networking resources

- Connect resources owned by different actors for collaboration or competition

- Connect a local workstation environment to an experiment to monitor or demonstrate the results of an experiment.

In all of these cases, a researcher specifies the resources and their interconnections to the DFA, which coordinates between the testbeds and connects the resources.

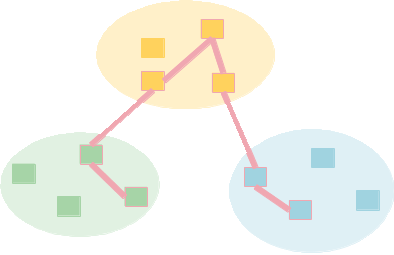

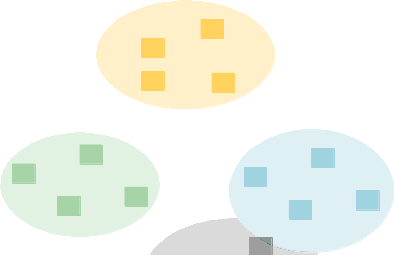

Consider a researcher who has access to several testbeds and wants to interconnect resources from those testbeds.

From disparate testbeds, the DETER Federation System will create a single unified environment.

Combining Specialized Resources

The most common reason to create a federated experiment is to build an experimental environment that combines the specialties of multiple institutions.

For example, DETER researchers have used the federation system to interconnect electrical power systems, high speed power simulation, a controlled IP network and a situational awareness display sited at three disparate research labs into a coherent whole. This example shows a federation between DETER, the University of Illinois and the Pacific Northwest National Laboratory (PNNL).

The federation system handles the internetworking of those resources, allowing the researchers to combine expertise.

Collaboration and Competition

Another use of federation is to construct experiments where different researchers have different levels of access to different parts of the overall experiment. Experimenters preparing defenses can be linked to experimenters preparing attacks and to a shared environment unknown to both of them. The result is a controlled interaction between groups of experiment elements.

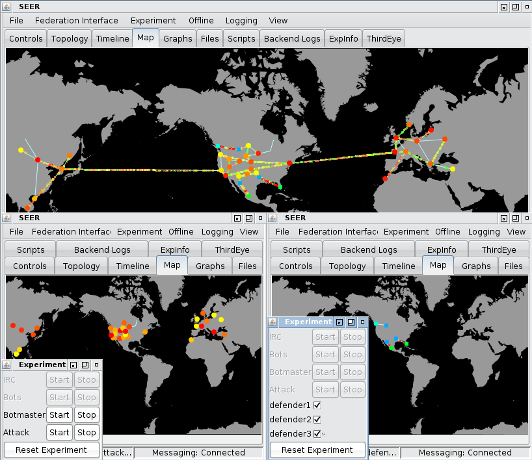

Using this technology, DETER researchers have interconnected attackers trying to spread a distributed denial of service (DDoS) attack using a worm with a defender that deploys multiple service access points in various large networks. A simple visualization of this situation is shown in the accompanying figure.

Integrating Researcher Desktops

Often a researcher will want to directly interconnect their desktop or another computer into an experimental environment. This can enable an experimenter to debug effectively by importing their tools and environment easily, or to demonstrate the experiment to others by incorporating a display engine.

This is a special case of combining specialized resources, but the lightweight system DETER uses to facilitate this is of particular interest.

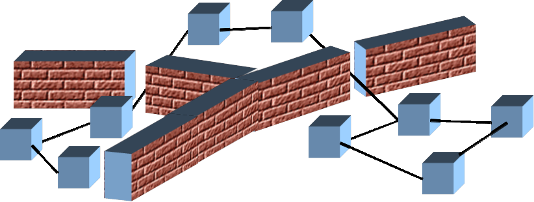

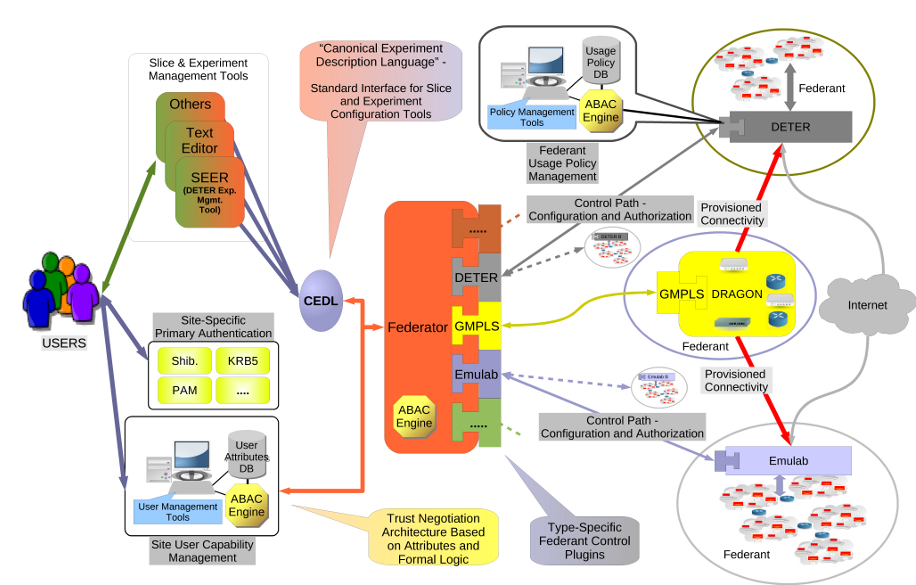

The Software Architecture

The overall architecture of the DFA is shown below. Users specify their experiment environments to a system that coordinates access to multiple testbeds/resource providers. Each testbed may use different interfaces and have different policies for access.

The plug-in system simplifies integration of different testbed interfaces to the DFA. Each plug-in translates from the local testbed's interface into a standard interface.

The Attribute-based Access Control (ABAC) authorization logic and some associated DFA translation technology allow different policies to mesh. ABAC is a powerful, well-specified authorization logic that can encode a variety of policies. It allows sophisticated delegation and other access control rules to be expressed interoperably. It is in use both in the DFA and in the GENI testbed.

In principle, testbeds can use the full power of ABAC to describe their policies, but in practice DFA tools allow simple configuration of access control for most testbeds.

The function of the Federator in the diagram above is divided into two parts:

- Experiment controller

- Presents the abstraction of a federated experiment to the users. Allows allocation, configuration, and release of resources to the federated experiment.

- Access Controller

- Negotiates access and allocation of resources from federant testbeds.

The access controller is depicted by the regular plug-in shapes in the federator. These are standard plug-in interfaces that allow standard access to a variety of testbed types. Currently Emulab-style testbeds and DRAGON-style transit networks are supported. The regular system interface to fedd allows new systems to be added easily.

Depending on the nature of the access controller, it may be implemented on the local testbed, on the same host as the experiment controller (even in the same process), or partially in both places. This depends on the access mechanisms of the underlying testbed and the policies of the administrators. We have implemented plug-ins for Emulab-style testbeds that run completely remotely and access the testbed through ssh, and plug-ins that run on the Emulab testbed directly and communicate outside the testbed entirely through fedd interfaces. Access controllers that run directly on the remote testbed have more discretion in executing privileged local commands than remote access controllers.

The access controller/experiment controller interface is documented.

The Experiment Controller

A researcher creating a federated experiment uses our federation tools to talk directly to an experiment controller. Commonly DETER users talk to DETER's experiment controller. While experiment controllers can run on any testbed - in fact each user is welcome to run their own controller - using DETER's controller leverages the trust other testbeds place in that controller and the information it has gathered.

The experiment controller is responsible for getting access to resources at the various testbeds, creating the sub-topologies that make up the federated experiment, and interconnecting them. As the experiment runs, the experiment controller can monitor and

Fedd: The DETER Federation Daemon

Fedd is the name for the codebase that supports both the access controller and experiment controller function. Generally any instance of fedd will be acting as either an experiment controller or an access controller. We continue to move toward reflecting the distinction in function in the codebase, but this is not complete.

Generally fedd is configured by the testbed's administration to allow controlled sharing of the resources, though the same software and interfaces can be used by single users to coordinate federated resources.

Creating a federated experiment consists of breaking an annotated experiment into sub-experiments, gaining access to testbeds that can host those experiments, and coordinating the creation and connection of those sub-experiments. Fedd insulates the user from the various complexities of creating sub-experiments and dealing with cleanup and partial failures. Once an experiment is created, fedd returns credentials necessary to administer the experiment.

On termination, fedd cleans up the various sub-experiments and deallocates any dynamic resources or credentials.

Access Control

This section describes the basic model of access control that fedd implements. We start by explaining how users are represented between fedds and then describe the process of acquiring and releasing access to a testbed's resources.

This section documents the current access control system of fedd. It is undergoing development.

Global Identifiers: Fedids

In order to avoid collisions between local user names the DFA defines a global set of names called DETER federation IDs, or more concisely fedids. A fedid has two properties:

- An entity claiming to be named by a fedid can prove it interactively

- Two entities referring to the same fedid can be sure that they are referring to the same entity

An example implementation of a fedid is an RSA public key. An entity claiming to be identified by a given public key can prove it by responding correctly to a challenge encrypted by that key. For a large enough key size, collisions are rare enough that the second property holds probabilistically.

We adopt public keys as the basis for DFA fedids, but rather than tie ourselves to one public key format, we use subject key identifiers as derived using method (1) in RFC3280. That is, we use the 160 bit SHA-1 hash of the public key. While this allows a slightly higher chance of collisions than using the key itself, the advantage of a single representation in databases and configuration files outweighs the increase in risk for this application.
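
As a minimal sketch of the derivation described above, the following Python computes a fedid-style identifier as the SHA-1 hash of a public key. The function name `fedid_from_public_key` and the sample byte strings are hypothetical. The sketch assumes the caller already holds the raw subjectPublicKey bytes that RFC 3280 method (1) hashes; extracting those bytes from a certificate is not shown.

```python
import hashlib


def fedid_from_public_key(public_key_bytes: bytes) -> str:
    """Return the 160-bit SHA-1 digest of the key as 40 hex characters.

    Assumption: public_key_bytes holds the raw subjectPublicKey bits
    (no ASN.1 tag, length, or unused-bits octet), which is what
    RFC 3280 method (1) hashes. Certificate parsing is out of scope.
    """
    return hashlib.sha1(public_key_bytes).hexdigest()


# Placeholder bytes standing in for real keys (illustration only).
key_a = b"example public key A"
key_b = b"example public key B"

fedid_a = fedid_from_public_key(key_a)
fedid_b = fedid_from_public_key(key_b)

# The same key always yields the same fedid, so one fixed-size string
# can stand in for the key in databases and configuration files.
print(fedid_a)
print(fedid_a == fedid_from_public_key(key_a))
print(fedid_a != fedid_b)
```

The fixed 160-bit size is what gives the single representation mentioned above, regardless of the underlying key format.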

Global Identifiers: Three-level Names

In Emulab, experiments are created by users within projects, and those attributes determine what resources can be accessed. We generalized this idea into a (testbed, project, user) triple that is used for access control decisions. A requester identified as ("DETER", "proj1", "faber") is a user from the DETER testbed, proj1 project, user faber. Testbeds contain projects and users, projects contain users, and users do not contain anything.

Parts of the triple can be left blank: ( , ,"faber") is a user named faber without a testbed or project affiliation.

Though the examples used above are simple strings, the outermost (i.e., leftmost) non-blank field of a name must be a fedid to be valid, and is treated as the entity asserting the name. Contained fields can either be fedids or local names. This allows a testbed to continue to manage its namespace locally.

If DETER has fedid:1234 then the name (fedid:1234, "proj1", "faber") refers to the DETER user faber in proj1, if and only if the requester can prove they are identified by fedid:1234. Note that by making decisions on the local names a testbed receiving a request is trusting the requesting testbed about the membership of those projects and names of users.

Testbeds make decisions about access based on these three level names. For example, any user in the "emulab-ops" project of a trusted testbed may be granted access to federated resources. It may also be the case that any user from a trusted testbed is granted some access, but that users from the emulab-ops project of that testbed are granted access to more kinds of resources.

These three level names are used only for testbed access control. All other entities in the system are identified by a single fedid.
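
The naming rules in this section can be sketched in Python as follows. This is an illustration under stated assumptions, not fedd's data model: the class `ThreeLevelName`, the `fedid:` textual prefix, the function `access_level`, and the level names `none`, `basic`, and `extended` are all hypothetical. The interactive proof that a requester really holds the fedid is not modeled.

```python
from dataclasses import dataclass
from typing import Optional, Set

FEDID_PREFIX = "fedid:"  # assumed textual marker for a fedid


@dataclass(frozen=True)
class ThreeLevelName:
    """A (testbed, project, user) triple; blank parts are None."""
    testbed: Optional[str] = None
    project: Optional[str] = None
    user: Optional[str] = None

    def outermost(self) -> Optional[str]:
        """Return the leftmost non-blank field, or None if all are blank."""
        for part in (self.testbed, self.project, self.user):
            if part:
                return part
        return None

    def is_valid(self) -> bool:
        """A name is valid only if its outermost field is a fedid."""
        outer = self.outermost()
        return outer is not None and outer.startswith(FEDID_PREFIX)


def access_level(name: ThreeLevelName, trusted_testbeds: Set[str]) -> str:
    """Hypothetical policy: trusted testbeds get basic access, and their
    emulab-ops project members get extended access."""
    if not name.is_valid() or name.testbed not in trusted_testbeds:
        return "none"
    if name.project == "emulab-ops":
        return "extended"
    return "basic"


trusted = {"fedid:1234"}
print(access_level(ThreeLevelName("fedid:1234", "emulab-ops", "faber"), trusted))
print(access_level(ThreeLevelName("fedid:1234", "proj1", "faber"), trusted))
print(access_level(ThreeLevelName(user="faber"), trusted))
```

Note that the policy keys on the local project name, which reflects the trust the receiving testbed places in the requesting testbed's membership claims.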

Granting Access

An access controller is responsible for mapping from the global identifier space into the local access control of the testbed. This may mean mapping a request into a local Emulab project and user, a local DRAGON certificate, or a local ProtoGENI certificate. As other plug-ins are added, other mappings will appear.

Emulab Projects

Once an access controller managing an Emulab has decided to grant a researcher access to resources, it implements that decision by granting the researcher access to an Emulab project with relevant permissions on the local testbed. The terminology is somewhat unfortunate in that the access controller is configured to grant access based on the global three-level name that includes project and user components and implements that decision by granting access to a local Emulab project and Emulab user.

The Emulab project to which the fedd grants access may exist and contain static users and resource rights, may exist but be dynamically configured by fedd with additional resource rights and access keys, or may be created completely by fedd. Completely static projects are primarily used when a user wants to tie together his or her accounts on multiple testbeds that do not bar that behavior, but do not run fedd.
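
One way to picture the three kinds of local project is a small policy table that maps the testbed and project parts of a global name to a local Emulab project and a handling mode. The Python below is a sketch only; the table layout, the names `ProjectMode`, `LocalGrant`, `POLICY`, and `map_request`, and the local project names are hypothetical and do not describe fedd's actual configuration format.

```python
from enum import Enum
from typing import NamedTuple, Optional


class ProjectMode(Enum):
    STATIC = "static"    # project exists; users and rights are fixed
    DYNAMIC = "dynamic"  # project exists; rights and keys are added per request
    CREATED = "created"  # project is created entirely on demand


class LocalGrant(NamedTuple):
    project: str
    mode: ProjectMode


# Hypothetical policy: (testbed fedid, global project) -> local grant.
# A project of None matches any project from that testbed.
POLICY = {
    ("fedid:1234", "emulab-ops"): LocalGrant("fed-ops", ProjectMode.STATIC),
    ("fedid:1234", None): LocalGrant("fed-guest", ProjectMode.DYNAMIC),
    ("fedid:5678", None): LocalGrant("fed-temp", ProjectMode.CREATED),
}


def map_request(testbed: str, project: Optional[str]) -> Optional[LocalGrant]:
    """Return the local grant for a request, preferring an exact match."""
    exact = POLICY.get((testbed, project))
    if exact is not None:
        return exact
    return POLICY.get((testbed, None))


print(map_request("fedid:1234", "emulab-ops"))
print(map_request("fedid:1234", "proj1"))
print(map_request("fedid:9999", "proj1"))
```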

Whether to dynamically modify or dynamically create projects depends significantly on testbed administration policy and on how widespread and frequent federation is. In Emulabs, projects are intended as long-term entities, and creating and destroying them on a per-experiment basis may not appeal to some users. However, static projects require some administrator investment per project.

Certificate Systems: DRAGON and ProtoGENI

Access controllers on these systems map the request into a flat space of X.509 certificates and keys that provide the identity for these identity-based systems. Unlike the self-signed certificates representing fedids, these are full X.509 certificates used to establish a chain of trust. Different global users are mapped into certificates and keys that match the access level intended.

Experiments

A federated experiment combines resources from multiple independently administered testbeds into a cohesive environment for experimentation. Earlier versions of fedd enforced a Master/Slave relationship with one Emulab project exporting its environment to others. While this model remains viable, current fedd implementations allow compositions of the services and views of the facilities as well as their resources.

Currently experiments can be described in the same ns2 syntax that DETER and Emulab use, with additional annotations that name the testbed on which to place each node. (The extension is tb-set-node-testbed, described elsewhere.) Most users will initially specify experiments in this format. The testbed name here is a local string meaningful to the experimenter. Each fedd knows some set of such strings, and experimenters can also inform fedd of the appropriate mapping between a local testbed name and the fedd address to contact.

Fedd also understands experiments expressed in the topdl language that it uses to communicate with facilities.

An experimenter creates an experiment by presenting it to a fedd that knows him or her - usually the fedd on their local testbed - along with the master testbed name, local Emulab project to export and local mappings. That fedd will map the local user into the global (testbed, project, user) namespace and acquire and configure resources to create the experiment. A fedd may try different mappings of the experimenter into the global namespace in order to acquire all the resources. For example a local experimenter may be a member of several local Emulab projects that map into different global testbed-scoped projects.

Once the experiment is created, the user can gather information on the resources allocated to it and where to access them. (Experiments are identified by fedids.) Each experiment also has a local name, which is mnemonic and can be used locally to access information about the experiment and to terminate it. The experimenter can suggest a name when requesting experiment creation.

In order to create a fedid, a key pair is created. The public and private keys for the fedid representing the experiment are returned over an encrypted channel from fedd to the requesting experimenter. The experimenter can use this key pair as a capability to grant access to the experiment to other users.

While an experiment exists, experimenters can access the nodes directly using the credentials passed back and can acquire information about the experiment's topology from fedd. Only the experimenter who created it or one given the capability to manipulate it can get this information.

Finally, fedd will terminate an experiment on behalf of the experimenter who created it, or one who has been granted control of it. Fedd is responsible for deallocating the running experiments and telling the testbeds that this experimenter is done with their access.

The fedd installation includes a command line program that allows users to create and delete federated experiments. We are currently developing other interfaces, including a SEER interface.

Experiment Descriptions

An experiment description is currently given in a slightly extended version of the ns2 dialect that is used for an Emulab experiment description. Specifically, the tb-set-node-testbed command has been added. Its syntax is:

tb-set-node-testbed node_reference testbed_name

The node_reference is the Tcl variable containing the node and the testbed_name is a name meaningful to the fedd creating the experiment. The experiment creation interface has a mechanism that a requester can use to supply translations from testbed names to the URIs needed to contact that testbed's fedd for access.

Multiple segments of a federated experiment can be embedded on the same testbed by specifying the testbed_name as name/subname. In that syntax, name is the identifier of the testbed on which to embed the node and subname is a unique identifier within the federated experiment. For example, all the nodes assigned to deter/attack will be in the same sub-experiment, and that will be a different sub-experiment from nodes in deter/defend.
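
The name/subname convention can be illustrated with a short Python sketch. The helper names `parse_testbed_name` and `group_nodes` and the sample node assignments are hypothetical; this shows only the grouping rule described above, not how fedd parses the experiment description.

```python
from collections import defaultdict


def parse_testbed_name(testbed_name):
    """Split 'name/subname' into (name, subname); subname is None if absent."""
    name, sep, subname = testbed_name.partition("/")
    return name, (subname if sep else None)


def group_nodes(assignments):
    """Group nodes by full testbed_name, so that deter/attack and
    deter/defend become separate sub-experiments on the same testbed."""
    segments = defaultdict(list)
    for node, testbed_name in assignments.items():
        segments[testbed_name].append(node)
    return dict(segments)


# Hypothetical node-to-testbed assignments.
assignments = {
    "a": "deter/attack",
    "b": "deter/attack",
    "c": "deter/defend",
    "d": "ucb",
}

print(parse_testbed_name("deter/attack"))
print(group_nodes(assignments))
```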

In addition, fedd understands the tb-set-default-failure-action command. This sets the default failure action for nodes in the experiment to one of fatal, nonfatal, or ignore. Those values have the same meaning as in standard Emulabs. Nodes that do not have their failure mode reset by the tb-set-node-failure-action command will use the explicit default. If no default is set, fatal is used.
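
The precedence among the per-node action, the explicit default, and the built-in fallback can be written out as a few lines of Python. The function name `effective_failure_action` is hypothetical, and the sketch captures only the resolution order stated above.

```python
VALID_ACTIONS = ("fatal", "nonfatal", "ignore")


def effective_failure_action(node_action=None, default_action=None):
    """Resolve a node's failure action.

    Order: the per-node value (tb-set-node-failure-action), then the
    experiment default (tb-set-default-failure-action), then 'fatal'.
    """
    for action in (node_action, default_action):
        if action is not None:
            if action not in VALID_ACTIONS:
                raise ValueError(f"unknown failure action: {action!r}")
            return action
    return "fatal"


print(effective_failure_action())                      # no settings at all
print(effective_failure_action(None, "ignore"))        # default only
print(effective_failure_action("nonfatal", "ignore"))  # node overrides default
```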

Federated Experiment Creation

To give an intuition for how fedd works, we present a sample experiment description and show how it would be turned into a federated experiment. The experiment description is:

    # simple DETER topology for playing with SEER
    set ns [new Simulator]
    source tb_compat.tcl

    set a [$ns node]
    set b [$ns node]
    set c [$ns node]
    set d [$ns node]
    set e [$ns node]

    set deter_os "FC6-STD"
    set deter_hw "pc"
    set ucb_os "FC6-SMB"
    set ucb_hw "bvx2200"

    tb-set-node-os $a $deter_os
    tb-set-node-testbed $a "deter"
    tb-set-hardware $a $deter_hw

    tb-set-node-os $b $deter_os
    tb-set-node-testbed $b "deter"
    tb-set-hardware $b $deter_hw

    tb-set-node-os $c $ucb_os
    tb-set-node-testbed $c "ucb"
    tb-set-hardware $c $ucb_hw

    tb-set-node-os $d $ucb_os
    tb-set-node-testbed $d "ucb"
    tb-set-hardware $d $ucb_hw

    tb-set-node-os $e $ucb_os
    tb-set-node-testbed $e "ucb"
    tb-set-hardware $e $ucb_hw

    set link0 [ $ns duplex-link $a $b 100Mb 0ms DropTail]
    set link1 [ $ns duplex-link $c $b 100Mb 0ms DropTail]
    set lan0 [ $ns make-lan "$c $d $e" 100Mb 0ms ]

    $ns rtproto Static
    $ns run

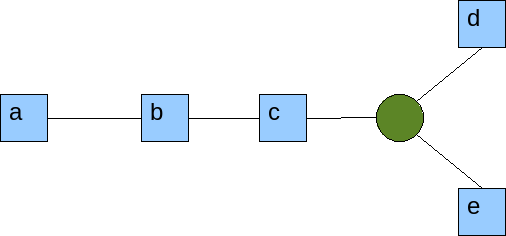

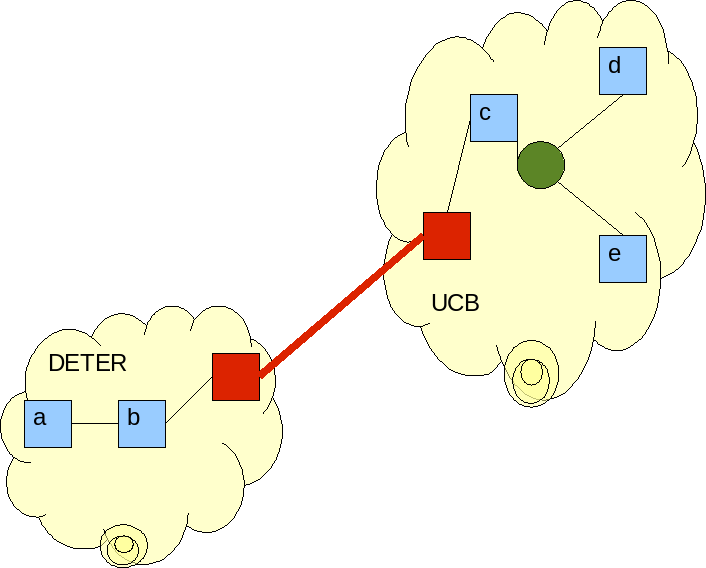

That experiment file generates the following topology:

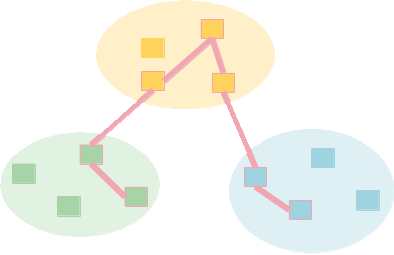

Each of the boxes is a node and the circle is a shared network. Considering the values of the tb-set-node-testbed calls, the experiment will be split up like this:

The assignment of nodes to testbeds implicitly creates links (or networks) that must be connected across the wide area, and one of the key jobs of fedd is to create these connections. Fedd splits the experiment description into two descriptions (one for each testbed), adds extra nodes and startcmds to create the shared experiment, and swaps them in. The sub-experiments look something like these:

    set ns [new Simulator]
    source tb_compat.tcl

    set a [$ns node]
    tb-set-hardware $a pc
    tb-set-node-os $a FC6-STD
    tb-set-node-tarfiles $a /usr /proj/emulab-ops//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $a "deter"
    tb-set-node-startcmd $a "sudo -H /usr/local/federation/bin/make_hosts /proj/emulab-ops/exp/bwfed/tmp//hosts >& /tmp/federate \$USER"
    tb-set-node-failure-action $a "fatal"

    set b [$ns node]
    tb-set-hardware $b pc
    tb-set-node-os $b FC6-STD
    tb-set-node-tarfiles $b /usr /proj/emulab-ops//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $b "deter"
    tb-set-node-startcmd $b "sudo -H /usr/local/federation/bin/make_hosts /proj/emulab-ops/exp/bwfed/tmp//hosts >& /tmp/federate \$USER"

    set control [$ns node]
    tb-set-hardware $control pc
    tb-set-node-os $control FC6-STD
    tb-set-node-tarfiles $control /usr /proj/emulab-ops//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $control "deter"
    tb-set-node-startcmd $control "sudo -H /usr/local/federation/bin/make_hosts /proj/emulab-ops/exp/bwfed/tmp//hosts >& /tmp/federate \$USER "
    tb-set-node-failure-action $control "fatal"

    # Link establishment and parameter setting
    set link0 [$ns duplex-link $a $b 100000kb 0.0ms DropTail]
    tb-set-ip-link $a $link0 10.1.1.2
    tb-set-ip-link $b $link0 10.1.1.3

    # federation gateway
    set ucbtunnel0 [$ns node ]
    tb-set-hardware $ucbtunnel0 pc3000_tunnel
    tb-set-node-os $ucbtunnel0 FBSD7-TVF
    tb-set-node-startcmd $ucbtunnel0 "sudo -H /usr/local/federation/bin/fed-tun.pl -f /proj/emulab-ops/exp/bwfed/tmp/`hostname`.gw.conf >& /tmp/bridge.log"
    tb-set-node-tarfiles $ucbtunnel0 /usr/ /proj/emulab-ops//tarfiles/bwfed/fedkit.tgz

    # Link establishment and parameter setting
    set link1 [$ns duplex-link $ucbtunnel0 $b 100000kb 0.0ms DropTail]
    tb-set-ip-link $ucbtunnel0 $link1 10.1.3.2
    tb-set-ip-link $b $link1 10.1.3.3

    $ns rtproto Session
    $ns run

    set ns [new Simulator]
    source tb_compat.tcl

    set c [$ns node]
    tb-set-hardware $c pc
    tb-set-node-os $c FC6-SMB
    tb-set-node-tarfiles $c /usr /proj/Deter//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $c "ucb"
    tb-set-node-startcmd $c "sudo -H /bin/sh /usr/local/federation/bin/federate.sh >& /tmp/startup \$USER "
    tb-set-node-failure-action $c "fatal"

    set d [$ns node]
    tb-set-hardware $d pc
    tb-set-node-os $d FC6-SMB
    tb-set-node-tarfiles $d /usr /proj/Deter//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $d "ucb"
    tb-set-node-startcmd $d "sudo -H /bin/sh /usr/local/federation/bin/federate.sh >& /tmp/startup \$USER "
    tb-set-node-failure-action $d "fatal"

    set e [$ns node]
    tb-set-hardware $e pc
    tb-set-node-os $e FC6-SMB
    tb-set-node-tarfiles $e /usr /proj/Deter//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $e "ucb"
    tb-set-node-startcmd $e "sudo -H /bin/sh /usr/local/federation/bin/federate.sh >& /tmp/startup \$USER"
    tb-set-node-failure-action $e "fatal"

    set f [$ns node]
    tb-set-hardware $f pc
    tb-set-node-os $f FC6-SMB
    tb-set-node-tarfiles $f /usr /proj/Deter//tarfiles/bwfed/fedkit.tgz
    # tb-set-node-testbed $f "ucb"
    tb-set-node-startcmd $f "sudo -H /bin/sh /usr/local/federation/bin/federate.sh >& /tmp/startup \$USER"
    tb-set-node-failure-action $f "fatal"

    # Create LAN
    set lan0 [$ns make-lan "$c $d $e " 100000kb 0.0ms ]

    # Set LAN/Node parameters
    tb-set-ip-lan $c $lan0 10.1.2.2
    tb-set-lan-simplex-params $lan0 $c 0.0ms 100000kb 0 0.0ms 100000kb 0

    # Set LAN/Node parameters
    tb-set-ip-lan $d $lan0 10.1.2.3
    tb-set-lan-simplex-params $lan0 $d 0.0ms 100000kb 0 0.0ms 100000kb 0

    # Set LAN/Node parameters
    tb-set-ip-lan $e $lan0 10.1.2.4
    tb-set-lan-simplex-params $lan0 $e 0.0ms 100000kb 0 0.0ms 100000kb 0

    # federation gateway
    set detertunnel0 [$ns node ]
    tb-set-hardware $detertunnel0 pc3000_tunnel
    tb-set-node-os $detertunnel0 FBSD7-TVF
    tb-set-node-startcmd $detertunnel0 "sudo -H /usr/local/federation/bin/fed-tun.pl -f /proj/Deter/exp/bwfed/tmp/`hostname`.gw.conf >& /tmp/bridge.log"
    tb-set-node-tarfiles $detertunnel0 /usr/ /proj/Deter//tarfiles/bwfed/fedkit.tgz

    # Link establishment and parameter setting
    set link1 [$ns duplex-link $c $detertunnel0 100000kb 0.0ms DropTail]
    tb-set-ip-link $c $link1 10.1.3.2
    tb-set-ip-link $detertunnel0 $link1 10.1.3.3

    $ns rtproto Session
    $ns run

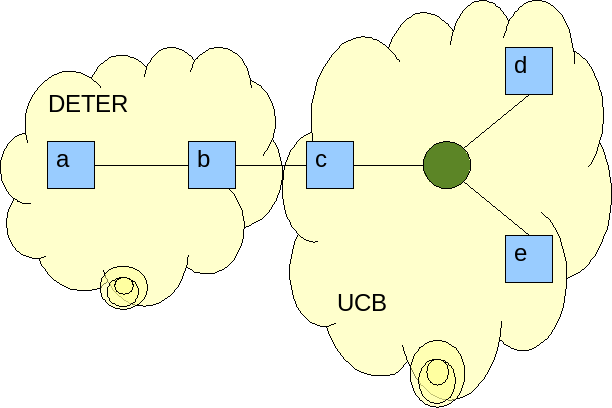

The resulting topology across two testbeds looks like this:

The red nodes are the connector nodes inserted by fedd. They both transfer experimental traffic verbatim and tunnel services, like a shared filesystem. Exactly what gets tunneled and how the connections are made is a function of the federation kit, the topic of the next section.
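
The first step of the split, deciding which links stay inside one testbed and which must cross the wide area, can be sketched in Python using the sample topology from this section. The function `split_topology` and the dictionary layout are hypothetical; the sketch does not generate ns2 output or add the connector nodes, it only identifies where they are needed.

```python
# Node placement and links taken from the sample experiment description.
testbed_of = {"a": "deter", "b": "deter", "c": "ucb", "d": "ucb", "e": "ucb"}
links = {
    "link0": ["a", "b"],
    "link1": ["c", "b"],
    "lan0": ["c", "d", "e"],
}


def split_topology(testbed_of, links):
    """Return (local_links, crossing_links).

    local_links maps each testbed to the links wholly inside it.
    crossing_links maps each link that spans testbeds to the sorted
    list of testbeds it touches; each such link needs connector nodes.
    """
    local_links = {tb: [] for tb in sorted(set(testbed_of.values()))}
    crossing_links = {}
    for name, members in links.items():
        testbeds = sorted({testbed_of[node] for node in members})
        if len(testbeds) == 1:
            local_links[testbeds[0]].append(name)
        else:
            crossing_links[name] = testbeds
    return local_links, crossing_links


local_links, crossing_links = split_topology(testbed_of, links)
print(local_links)
print(crossing_links)
```

In this topology only link1 joins nodes on different testbeds, which matches the single pair of tunnel nodes (ucbtunnel0 and detertunnel0) in the generated sub-experiments.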

Experiment Services

An important part of the cohesive experiment framework created by the DFA is the web of services interconnecting the sub experiments. A service, in this context, is fairly liberally defined; it is any one of several pre-defined ways of sharing information statically or dynamically between sub-experiments. Static information includes the accounts to create on various machines or the hostnames to export to sub-experiments. Dynamic information includes mounting filesystems or connecting user experiment tools, like SEER.

Each service has an exporter, the testbed that provides the service, and zero or more importers, testbeds that use the information. In addition, services can have attribute/value pairs as parameters.

The specific services currently supported by fedd are:

- hide_hosts

- By default, all hosts in the topology are visible across the experiment. This service allows some hosts to be kept completely local. The hosts chosen are given by the hosts attribute.

- local_seer_control

- Adds a local SEER control node to sub-experiments in the exporting testbed.

- project_export

- Exports the user configuration and filesystem named by the project attribute to the importing testbeds

- seer_master

- Adds a second SEER controller to the exporting testbed that is capable of aggregating the inputs from several local SEER masters. To create a SEER instance that sees several sub-experiments, create a local_seer_control in each and export a seer_master to all.

- SMB

- Export a set of SMB/CIFS filesystems.

- userconfig

- Export the account information from a given project or group. Currently accepts the project attribute for that purpose.
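
The exporter, importer, and attribute structure of a service can be modeled as a small record. The Python below is a sketch; the `Service` class, the `seer_services` helper, and the testbed names are hypothetical, and this is not the format fedd uses on the wire. It builds the SEER arrangement described in the seer_master entry: one local_seer_control per testbed and one seer_master exported to all.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Service:
    name: str                      # e.g. "project_export", "SMB"
    exporter: str                  # testbed that provides the service
    importers: List[str] = field(default_factory=list)       # zero or more users
    attributes: Dict[str, str] = field(default_factory=dict)  # parameters


def seer_services(testbeds, master):
    """One local_seer_control per testbed, plus a seer_master on the
    chosen testbed that every testbed imports."""
    services = [Service("local_seer_control", exporter=tb) for tb in testbeds]
    services.append(
        Service("seer_master", exporter=master, importers=list(testbeds))
    )
    return services


# A project export with its project attribute (values are placeholders).
export = Service("project_export", "deter", ["ucb"], {"project": "emulab-ops"})
print(export)

for svc in seer_services(["deter", "ucb"], master="deter"):
    print(svc.name, svc.exporter, svc.importers)
```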

Service advertisement, provision, and specification is an ongoing area of research in federation, and we expect these specifications to become more detailed and useful as that research progresses.

The Federation Kit

The federation kit is the software used by fedd to connect testbeds and tunnel services from the master to the other testbeds. Currently we have a single fedkit (available from the download section) that provides SSH tunnels to connect experimental testbeds at the Ethernet layer, tunnels the Emulab event system, and provides shared file service via SMB. That fedkit runs on any FreeBSD or Linux image that provides the SMB file system.

By splitting this function out, we intend to allow different installations of fedd to provide different interconnection and service tunneling function. Currently the DETER fedkit is the only federation kit in use, and fedd defaults its startcmd options for use with it.

The federation kit has two roles:

- Configuring experiment nodes to use services, such as shared file systems.

- Configuring portal nodes to connect experiments

Fedkit on Experiment Nodes

On experiment nodes, the fedkit starts dynamic routing, and optionally configures user accounts and samba filesystems if they are in use. The system expects the following software to be available:

- quagga routing system (an old gated installation will also work)

- samba-client

- smbfs

Those are the Linux package names; equivalent FreeBSD packages will also work. Software counts as available if it is either installed or accessible using yum or apt-get. DETER nodes have a local repository for that purpose.

The fedkit is installed in /usr/local/federation and when run places a log in /tmp/federate.

Services are initialized based on the contents of /usr/local/federation/etc/client.conf. Possible values include:

- ControlGateway

- The DNS name (or IP address) of the node that will forward services

- Hide

- Do not add this node to the node's view of the experiment. Used for multi-party experiments.

- PortalAlias

- A name that will be mapped to the same IP address as the control gateway. SEER in particular expects nodes with certain functions to have certain names.

- ProjectUser

- The local user under which to mount shared project directories

- ProjectName

- Project name to derive shared project directories from

- Service

- a string naming the services to initialize.

- SMBShare

- The name of the share to mount
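
To make the role of these settings concrete, the Python sketch below reads a configuration of this kind into a dictionary. The file syntax is an assumption: the text above names the settings but not the layout, so the sketch supposes one `Name: value` pair per line. The function `parse_client_conf` and all sample values are hypothetical.

```python
# Setting names listed in this section.
KNOWN_KEYS = {
    "ControlGateway", "Hide", "PortalAlias", "ProjectUser",
    "ProjectName", "Service", "SMBShare",
}


def parse_client_conf(text):
    """Parse assumed 'Name: value' lines into {name: [values]}.

    Values are kept in lists because a setting such as Service might
    plausibly appear more than once (an assumption of this sketch).
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or key not in KNOWN_KEYS:
            raise ValueError(f"unrecognized line: {raw!r}")
        settings.setdefault(key, []).append(value.strip())
    return settings


# Placeholder contents; none of these values come from a real deployment.
sample = """\
ControlGateway: control.example.net
ProjectUser: faber
ProjectName: emulab-ops
Service: userconfig
Service: SMB
SMBShare: USERS
"""

conf = parse_client_conf(sample)
print(conf["ControlGateway"])
print(conf["Service"])
```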

Fedkit on Portal Nodes

On portal nodes the fedkit uses ssh to interconnect the segments and bridges traffic at layer 2. If the portal node is a Linux image it needs to have the bridge-tools package available. Like the fedkit on experiment nodes, it will attempt to load that software from repositories if it is not present.

The fedkit configures the portal based on the contents of a configuration file containing the following parameters:

- active

- a boolean. If true this portal will initiate ssh connections to its peer.

- nat_partner

- a boolean. If true the fedkit's peer is behind a network address translator. Not used yet.

- tunnelip

- a boolean. If true use the DETER system for binding external addresses.

- peer

- a string. A list of DNS names or IP addresses. Usually this is one value, the DNS name of the peer, but passive ends of NATted portals may use a list of addresses to establish routing.

- ssh_pubkey

- a string. A file in which the access controller has placed the public half of the ssh key pair shared by this portal and its peer. These are nonce keys discarded after the experiment ends.

- ssh_privkey

- a string. A file in which the access controller has placed the private half of the ssh key pair shared by this portal and its peer. These are nonce keys discarded after the experiment ends.

The passive portal node establishes routing connectivity to the active end, reconfigures the local sshd to allow link layer forwarding and to allow the active end to remotely configure it, and waits. The active end connects through ssh, establishes a link layer forwarding tunnel and bridges that to the experimental interface. It also forwards ports to connect experiment services.
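
The division of labor between the active and passive ends can be summarized in a short Python sketch. The `PortalConfig` class mirrors the parameters listed above, while the `startup_steps` function, the step wording, and the sample values are hypothetical. The sketch only lists the steps in order; it does not run ssh or configure bridging.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PortalConfig:
    active: bool            # True: this portal initiates the ssh connection
    peer: List[str]         # DNS names or IP addresses of the peer
    ssh_pubkey: str         # path to the shared nonce public key
    ssh_privkey: str        # path to the shared nonce private key
    nat_partner: bool = False
    tunnelip: bool = False


def startup_steps(cfg: PortalConfig) -> List[str]:
    """Return the ordered steps this portal performs, per its role."""
    if cfg.active:
        return [
            f"connect to {cfg.peer[0]} through ssh",
            "establish a link layer forwarding tunnel",
            "bridge the tunnel to the experimental interface",
            "forward ports to connect experiment services",
        ]
    return [
        "establish routing connectivity to the active end",
        "reconfigure sshd to allow link layer forwarding and remote configuration",
        "wait for the active end",
    ]


# Placeholder paths and host names for illustration.
active = PortalConfig(True, ["portal.ucb.example.net"], "/tmp/key.pub", "/tmp/key")
passive = PortalConfig(False, ["portal.deter.example.net"], "/tmp/key.pub", "/tmp/key")

for step in startup_steps(passive) + startup_steps(active):
    print(step)
```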

Interfaces to fedd

The fedd interfaces are completely specified in WSDL and SOAP bindings. For simplicity and backward compatibility, fedd can export the same interfaces over XMLRPC, where the message formats are trivial translations.

All fedd accesses are through HTTP over SSL. Fedd can support traditional SSL CA chaining for access control, but the intention is to use self-signed certificates representing fedids. The fedid semantics are always enforced.

There are also several internal interfaces, specified in WSDL/SOAP and accessible through XMLRPC, that are used to simplify the distributed implementation of fedd. You can read about those in the development section.
